Hello. I need a web page for a blog about GPT4. Could you please write a ~2000 word article in a conversational tone, avoiding verbosity, about the techniques behind a generative pre-trained transformer. Please pitch it at an intelligent high-school child or soccer mum, not a tech-head. Provide placeholders for pictures to break up the text, and please suggest prompts that I can use to generate the pictures in one of the new AI text-to-image platforms. The prompts should be located where the picture should appear. Please avoid formatting anything as “code”; it messes with WordPress. Just plain text will do fine. Thanks.

In response to this prompt, GPT4 wrote the page below.

The picture prompts might have worked for a human, but generally failed to produce satisfactory results when fed to a graphics AI. GPT4 seems to overestimate the capabilities of a graphics AI, and the graphics AI in turn sucks badly at schematic diagrams, counting, and text. GPT4 seems not to know how badly the graphics engines currently perform with text, but will soon learn this as integrating these technologies will obviously be a high priority for web content producers. Two of the images below were composed with visual prompts from Google Images plus the suggested text, and even then, with the extra help, fell well short of conveying the intended meaning. I stopped using the prompts halfway through as it was increasingly unlikely that sensible results would be obtained.

The article is factually incorrect in suggesting that GPT4 powers all chatbots; the techniques might apply generally, but GPT4 has not been incorporated into all chat platforms.

The page also engaged in advertising, which was not really part of the instructions, suggesting that the language model has not been trained on a neutral dataset. It seems very likely that some additional company-friendly training has been added to the initial trained model, highlighting how much influence the producers of GPT4 have over what it says and thinks.

Apart from that, the general explanation of the underlying methodology appears satisfactory, but I am no expert, so comments are welcome.

Title: Understanding GPT-4: The AI That Powers Your Favorite Chatbot

Introduction

Hey there! Have you ever wondered how chatbots like Siri, Alexa, or even your favorite AI-powered video game characters can understand what you’re saying and respond in a way that makes sense? It might seem like magic, but it’s actually the result of some pretty amazing technology called GPT-4. This article will explain what GPT-4 is and how it works, in a way that’s easy to understand. So, let’s dive in!

[Picture Prompt: A friendly chatbot with speech bubbles containing questions and answers]

What is GPT-4?

GPT-4 stands for “Generative Pre-trained Transformer 4,” which might sound a little intimidating, but don’t worry – we’ll break it down into simpler terms. GPT-4 is a type of artificial intelligence (AI) that’s been designed to understand and generate human-like text. It’s called “generative” because it can create new sentences, paragraphs, or even whole articles (like this one!) based on the information it’s been trained on.

[Picture Prompt: A robot sitting at a desk, writing an article on a laptop]

How does GPT-4 work?

GPT-4 works by using a special kind of AI called a “transformer.” Transformers are designed to analyze and understand the structure of language, which allows them to make sense of the words we use and how they fit together. To understand how transformers work, let’s take a look at the three main components that make them up:

Attention Mechanism

Positional Encoding

Feed-Forward Neural Networks

[Picture Prompt: Three separate gears labeled “Attention Mechanism,” “Positional Encoding,” and “Feed-Forward Neural Networks” working together] (Not achieved by graphics AI, despite being fed an image that contained three cogs)

Attention Mechanism

The attention mechanism is the part of the transformer that helps it figure out which words in a sentence are the most important. Imagine you’re reading a sentence like “The cat chased the mouse.” The attention mechanism would help the AI understand that “cat” and “mouse” are the most important words in this sentence, and “chased” tells us what happened between them.
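For readers who want to see the idea in code, here is a minimal Python sketch of scaled dot-product attention, the calculation at the heart of a transformer. It is only an illustration: the two-number word vectors are made up, and a real model would learn much larger vectors plus separate "query", "key" and "value" transformations, which this sketch skips. Strictly speaking, attention does not pick the "most important" words in a sentence; it works out, for each word, how much weight to give every other word.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that add up to 1."""
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    d = len(query)
    # Score each key by how well it lines up with the query.
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Blend the value vectors using those weights.
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, mixed

words = ["the", "cat", "chased", "the", "mouse"]
# Made-up vectors, purely for illustration (assumption, not real model data).
vectors = [[0.1, 0.0], [1.0, 0.2], [0.3, 1.0], [0.1, 0.0], [0.9, 0.4]]

# Ask: when the model looks at "chased", how much does it attend to each word?
weights, mixed = attention(vectors[2], vectors, vectors)
for word, weight in zip(words, weights):
    print(f"{word:>7}: {weight:.2f}")
```

With these toy numbers, "cat" and "mouse" receive more weight than the two copies of "the", which is the flavour of what the paragraph above describes.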

[Picture Prompt: A magnifying glass highlighting the words “cat,” “chased,” and “mouse” in a sentence] (Not achieved by graphics AI)

Positional Encoding

Positional encoding is what helps the transformer understand the order of the words in a sentence. This is important because, in many languages (like English), the order of words can completely change the meaning of a sentence. For example, “The cat chased the mouse” has a very different meaning than “The mouse chased the cat.” Positional encoding helps the AI keep track of the order of words so that it can understand the meaning of the sentence correctly.
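One well-known way to do this is the sinusoidal encoding from the original transformer design, sketched below in Python. This is an assumption for the sake of illustration: the article does not say which scheme GPT-4 uses, and other models learn their position information instead. The function name and the tiny vector size are made up for the example.

```python
import math

def positional_encoding(position, dim):
    """Sinusoidal position vector: sine and cosine waves of different lengths."""
    encoding = []
    for i in range(0, dim, 2):
        angle = position / (10000 ** (i / dim))
        encoding.append(math.sin(angle))
        encoding.append(math.cos(angle))
    return encoding[:dim]

# The same word gets a different position vector depending on where it sits.
sentence_a = ["the", "cat", "chased", "the", "mouse"]
sentence_b = ["the", "mouse", "chased", "the", "cat"]

cat_in_a = positional_encoding(sentence_a.index("cat"), 4)  # position 1
cat_in_b = positional_encoding(sentence_b.index("cat"), 4)  # position 4
print(cat_in_a)
print(cat_in_b)
```

In a real model this position vector is combined with the word's own vector, so "cat" as the chaser and "cat" as the one being chased look different to the layers that follow.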

[Picture Prompt: Two sentences side by side, “The cat chased the mouse” and “The mouse chased the cat,” with arrows showing the different meanings] (LOL)

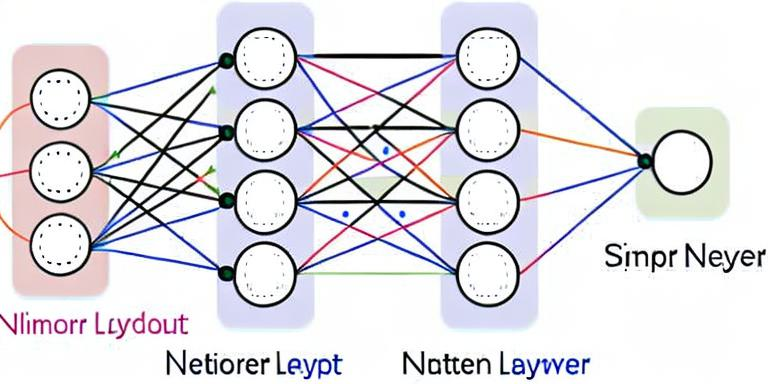

Feed-Forward Neural Networks

Feed-forward neural networks are the part of the transformer that helps it make predictions about what words should come next in a sentence. These networks are made up of layers of artificial neurons, which are designed to mimic the way our brains process information. By analyzing the patterns in the text it’s been trained on, the neural network can generate new sentences that sound like they were written by a human.
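As a rough sketch of what a "layer of artificial neurons" looks like in code, here is a tiny feed-forward network in Python with one hidden layer. The weights are hand-picked toy numbers, not anything learned, and the sizes are far smaller than in a real model. In an actual transformer, a block like this reworks the vector for each word, and a separate final step turns the result into a guess at the next word.

```python
def linear(x, weights, bias):
    """One layer of neurons: each row of weights is one neuron."""
    return [sum(xi * w for xi, w in zip(x, row)) + b for row, b in zip(weights, bias)]

def relu(x):
    """A simple activation: negative values are switched off."""
    return [max(0.0, v) for v in x]

def feed_forward(x, w1, b1, w2, b2):
    """Input -> hidden layer -> activation -> output layer."""
    hidden = relu(linear(x, w1, b1))
    return linear(hidden, w2, b2)

# Toy weights chosen by hand for illustration (assumption, not learned values).
w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 2 inputs -> 3 hidden neurons
b1 = [0.0, 0.0, 0.0]
w2 = [[1.0, 1.0, 1.0], [2.0, 0.0, 0.0]]    # 3 hidden neurons -> 2 outputs
b2 = [0.5, -1.0]

output = feed_forward([1.0, -2.0], w1, b1, w2, b2)
print(output)
```

Training, covered in the next section, is the process of adjusting numbers like w1 and w2 until the outputs become useful.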

[Picture Prompt: A simplified diagram of a neural network with input, hidden, and output layers] (LOL)

Training GPT-4: How does it learn?

To become really good at understanding and generating text, GPT-4 needs to be trained on a huge amount of data. The more data it’s trained on, the better it gets at understanding language patterns and creating realistic sentences. To train GPT-4, researchers at OpenAI fed it massive amounts of text from the internet, including books, articles, and websites. By studying all of this data, GPT-4 learned how to understand and generate text in a wide variety of topics and styles.

[Picture Prompt: A library filled with books and a computer downloading information from the internet]

But what about mistakes?

Just like humans, GPT-4 isn’t perfect. It can sometimes make mistakes or generate text that doesn’t quite make sense. This is because it’s trying to predict what comes next based on the patterns it’s seen in the data it was trained on. If it hasn’t seen a specific pattern before, or if the pattern is too complex for it to understand, it might generate something that sounds a little off. That being said, GPT-4 is constantly improving as it’s exposed to more and more data.
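The "predict what comes next from patterns" idea can be shown with something far simpler than a transformer. The Python sketch below is a toy word-pair counter, not how GPT-4 works internally: it just remembers which word most often followed each word in a tiny made-up text. It also shows the failure described above, because it has nothing to offer for a word it has never seen.

```python
from collections import Counter, defaultdict

def train(text):
    """Count, for every word, which words followed it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most common follower of a word, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# A tiny made-up training text (assumption, for illustration only).
corpus = "the cat chased the mouse . the cat ate the fish . the cat slept"
model = train(corpus)

print(predict_next(model, "the"))  # "cat" followed "the" most often
print(predict_next(model, "dog"))  # None: "dog" never appeared in the text
```

A real language model copes with unfamiliar input far better than this, but the underlying limitation is similar: its guesses are only as good as the patterns in its training data.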

[Picture Prompt: A puzzled robot looking at a piece of paper with nonsensical text]

How is GPT-4 used in the real world?

GPT-4 has many practical applications, from powering chatbots like the ones we mentioned earlier to helping people write better emails or essays. It can even be used to create realistic-sounding dialogue for video games, movies, or TV shows! Because it’s so versatile, GPT-4 has quickly become an important tool for businesses, educators, and creators alike.

[Picture Prompt: A collage of different applications of GPT-4, including a chatbot, a person writing an email, and a video game character]

Ethical considerations

As with any powerful technology, there are ethical concerns about how GPT-4 and similar AI systems could be used. For example, some people worry that AI-generated text could be used to create fake news or spread misinformation. Others are concerned about the potential loss of jobs as AI becomes more advanced and takes over tasks that used to be done by humans. It’s important for researchers, companies, and governments to work together to ensure that AI like GPT-4 is used responsibly and for the benefit of everyone.

[Picture Prompt: A balance scale with “Benefits” on one side and “Ethical Concerns” on the other, with people discussing around it]

Conclusion

GPT-4 is an incredible example of how far AI has come in recent years. Its ability to understand and generate human-like text has opened up a world of possibilities, from making our lives easier with chatbots to sparking our creativity with AI-generated stories. As long as we continue to use this technology responsibly and consider the ethical implications, the future of AI like GPT-4 looks bright!

[Picture Prompt: A shining light bulb with a friendly robot and a person standing next to it, symbolizing the bright future of AI]

And that’s it! Now you have a basic understanding of what GPT-4 is and how it works. We hope this article has given you some insight into the fascinating world of AI and the technology that powers some of your favorite digital helpers. Happy chatting!